9 advanced topics for deploying and managing Kubernetes containers

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation. It has a large, rapidly growing ecosystem, and Kubernetes services, support, and tools are widely available. In this article, we review 9 advanced topics that every Kubernetes system administrator, from junior to senior, should know.

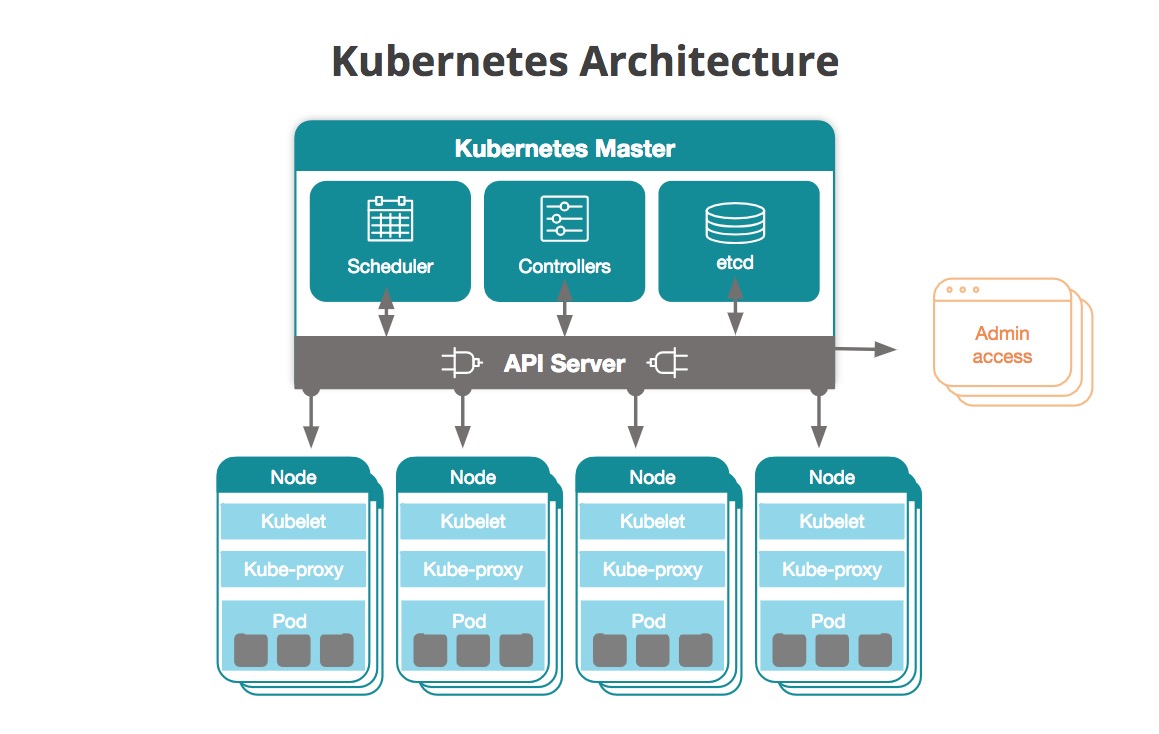

For those who are not familiar with Kubernetes and container technology, reading Overview of Kubernetes evolution from virtual servers and Kubernetes architecture is highly recommended. A solid understanding of microservices, or of how to migrate from a monolithic architecture to microservices, is crucial for doing advanced work on Kubernetes containers. Here is a good article to learn more. Also, reading and understanding Review of 17 essential topics for mastering Kubernetes is a prerequisite for this article.

To do advanced work as a system admin, you need to know how to use kubeconfig to manage different clusters. Then, you should learn how to manage computing resources in nodes. Kubernetes provides a friendly user interface that illustrates the current status of resources, such as deployments, nodes, and pods. You should learn how to build and administer it. Next, you should learn how to work with the RESTful API that Kubernetes exposes, which is a handy way to integrate with other systems. Finally, you should learn how to build a secure cluster by setting up authentication and authorization in Kubernetes.

1- Advanced settings in kubeconfig

kubeconfig is a configuration file that manages cluster, context, and authentication settings in Kubernetes, on the client side. Using the kubeconfig file, we are able to set different cluster credentials, users, and namespaces to switch between clusters or contexts within a cluster. It can be configured via the command line using the kubectl config subcommand or by updating a configuration file directly.
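The relationship between clusters, users, and contexts can be sketched in a few lines of Python. This is only an illustration of the file's structure, not a replacement for kubectl. The cluster names, user names, server URLs, and tokens below are hypothetical placeholders, and the helper functions `use_context` and `resolve_current` are made up for this example.

```python
import json

# Hypothetical clusters, users, and contexts, used only for illustration.
kubeconfig = {
    "apiVersion": "v1",
    "kind": "Config",
    "clusters": [
        {"name": "dev-cluster", "cluster": {"server": "https://dev.example.com:6443"}},
        {"name": "prod-cluster", "cluster": {"server": "https://prod.example.com:6443"}},
    ],
    "users": [
        {"name": "dev-admin", "user": {"token": "REPLACE_ME"}},
        {"name": "prod-viewer", "user": {"token": "REPLACE_ME"}},
    ],
    "contexts": [
        {"name": "dev",
         "context": {"cluster": "dev-cluster", "user": "dev-admin", "namespace": "default"}},
        {"name": "prod",
         "context": {"cluster": "prod-cluster", "user": "prod-viewer", "namespace": "web"}},
    ],
    "current-context": "dev",
}


def use_context(config, name):
    """Return a copy of the config with current-context switched to `name`."""
    if name not in [c["name"] for c in config["contexts"]]:
        raise KeyError(f"unknown context: {name}")
    return {**config, "current-context": name}


def resolve_current(config):
    """Look up the server, user, and namespace the current context points to."""
    ctx = next(c["context"] for c in config["contexts"]
               if c["name"] == config["current-context"])
    cluster = next(c["cluster"] for c in config["clusters"]
                   if c["name"] == ctx["cluster"])
    return cluster["server"], ctx["user"], ctx.get("namespace", "default")


switched = use_context(kubeconfig, "prod")
print(resolve_current(switched))
# A kubeconfig is normally written as YAML; JSON is a subset of YAML,
# so the JSON dump below has the same structure.
print(json.dumps(switched, indent=2))
```

On the command line, the same switch is done with `kubectl config use-context prod`.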

2- Setting resources in nodes

Computing resource management is very important in any infrastructure. You should know your application well and reserve enough CPU and memory capacity to avoid running out of resources. Specifically, you should know how to manage node capacity in Kubernetes nodes as well as pod computing resources.

Kubernetes has the concept of resource Quality of Service (QoS). It allows an administrator to prioritize pods when resources are allocated. Based on the pod’s resource requests and limits, Kubernetes classifies each pod as one of the following:

- Guaranteed pod

- Burstable pod

- BestEffort pod

The priority is Guaranteed > Burstable > BestEffort. For example, if a BestEffort pod and a Guaranteed pod run on the same Kubernetes node, and that node comes under resource pressure, such as running out of memory, the kubelet on that node terminates the BestEffort pod first.
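The classification rule can be sketched as a small Python function. This is a simplified sketch, not the Kubernetes implementation: the function name `qos_class` is hypothetical, the input is a list of dicts shaped like the `resources` field of each container, and quantities are compared as plain strings (so `"500m"` and `"0.5"` would be treated as different values).

```python
def qos_class(containers):
    """Classify a pod from its containers' resource settings.

    Each item looks like a container's `resources` field, for example
    {"requests": {"cpu": "500m"}, "limits": {"cpu": "500m"}}.
    """
    any_set = False      # does any container set a request or a limit?
    guaranteed = True    # do all containers have requests equal to limits?
    for resources in containers:
        requests = resources.get("requests", {})
        limits = resources.get("limits", {})
        if requests or limits:
            any_set = True
        for name in ("cpu", "memory"):
            limit = limits.get(name)
            # When a request is omitted, it defaults to the limit.
            request = requests.get(name, limit)
            if limit is None or request != limit:
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


print(qos_class([{}]))
print(qos_class([{"requests": {"cpu": "250m"}}]))
print(qos_class([{"limits": {"cpu": "500m", "memory": "128Mi"}}]))
```

The three calls cover a pod with no resource settings, a pod with only a CPU request, and a pod whose limits are set for both CPU and memory.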

3- Playing with WebUI

Kubernetes has a WebUI that visualizes the status of resources and machines, and also works as an additional interface for managing your application without the command line.

4- Working with the RESTful API

Users can control Kubernetes clusters via the kubectl command, which supports local and remote execution. However, some administrators or operators may need to control the Kubernetes cluster from their own programs. Kubernetes exposes a RESTful API that offers the same control over the cluster as the kubectl command, which is itself a client of that API. You should learn how to manage Kubernetes resources by submitting API requests.
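As a rough sketch of what an API request looks like, the Python below builds the URL for a resource collection and prepares an authenticated request using only the standard library. The server address and token are hypothetical placeholders, and `resource_url` and `build_request` are helper names invented for this example. The request is prepared but deliberately not sent.

```python
import urllib.request


def resource_url(server, resource, namespace=None, group=None, version="v1"):
    """Build the URL for a resource collection.

    Core resources (pods, services, nodes) live under /api/v1. Resources in
    a named API group (for example deployments in "apps") live under
    /apis/<group>/<version>.
    """
    prefix = f"/apis/{group}/{version}" if group else f"/api/{version}"
    scope = f"/namespaces/{namespace}" if namespace else ""
    return f"{server.rstrip('/')}{prefix}{scope}/{resource}"


def build_request(url, token, method="GET"):
    """Prepare (but do not send) a request authenticated with a bearer token."""
    return urllib.request.Request(
        url,
        method=method,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
    )


# Hypothetical API server address and token.
SERVER = "https://k8s.example.com:6443"
req = build_request(resource_url(SERVER, "pods", namespace="default"), "REPLACE_ME")
print(req.get_method(), req.full_url)
print(resource_url(SERVER, "deployments", namespace="web", group="apps"))
```

To actually send the request, pass it to `urllib.request.urlopen` together with an `ssl` context that trusts the cluster's certificate authority.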

5- Working with Kubernetes DNS

When you deploy many pods to a Kubernetes cluster, service discovery is one of the most important functions, because pods may depend on other pods, but the IP address of a pod changes when it restarts. You need a flexible way to communicate a pod’s IP address to other pods. Kubernetes has an add-on called kube-dns that helps in this scenario. It registers IP addresses for pods and Kubernetes Services and lets other pods look them up by name. You should learn how to use kube-dns, which gives you a flexible way to configure DNS in your Kubernetes cluster.
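To make the naming scheme concrete, here is a small Python sketch that builds the DNS name under which a Service is conventionally registered, `<service>.<namespace>.svc.<cluster-domain>`. It assumes the common default cluster domain `cluster.local`; the service and namespace names are hypothetical, and the `resolve` helper only returns useful results when run somewhere the cluster DNS is reachable, such as inside a pod.

```python
import socket


def service_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """Build the fully qualified DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolve(hostname):
    """Resolve a hostname to its IP addresses using the system resolver.

    Inside a pod, the system resolver is pointed at the cluster DNS,
    so Service names resolve to their cluster IPs.
    """
    infos = socket.getaddrinfo(hostname, None)
    return sorted({info[4][0] for info in infos})


# Hypothetical Service "backend" in namespace "web".
print(service_dns_name("backend", namespace="web"))
# prints "backend.web.svc.cluster.local"
```

A client pod can then connect to the name instead of a pod IP, so restarts of the backend pods do not require any change on the client side.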

6- Authentication and authorization

Authentication and authorization are both crucial for a platform such as Kubernetes. Authentication ensures that users are who they claim to be. Authorization verifies whether users have sufficient permissions to perform certain operations. Kubernetes supports various authentication and authorization plugins.
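As one example of an authorization plugin, role-based access control (RBAC) grants permissions through Role and RoleBinding objects. The Python sketch below generates both as a JSON manifest. The role name, namespace, and user are hypothetical, and `role_allows` is a deliberately simplified local check for illustration; it ignores wildcards, API groups, and resource names, and is not how the API server evaluates rules.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def pod_reader_role(namespace):
    """A Role that allows read-only access to pods in one namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [
            {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}
        ],
    }


def bind_role_to_user(role, user):
    """A RoleBinding that grants `role` to `user` in the role's namespace."""
    meta = role["metadata"]
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{meta['name']}-{user}", "namespace": meta["namespace"]},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": meta["name"], "apiGroup": RBAC_GROUP},
    }


def role_allows(role, verb, resource):
    """Simplified illustration of rule matching (no wildcards or API groups)."""
    return any(verb in rule["verbs"] and resource in rule["resources"]
               for rule in role["rules"])


# Hypothetical namespace "web" and user "jane".
role = pod_reader_role("web")
binding = bind_role_to_user(role, "jane")
manifest = {"apiVersion": "v1", "kind": "List", "items": [role, binding]}
print(json.dumps(manifest, indent=2))
```

The printed manifest can be saved to a file and applied with `kubectl apply -f`, and the result can be checked with `kubectl auth can-i list pods --as jane -n web`.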

7- Logging and monitoring

Logging and monitoring are two of the most important tasks in Kubernetes. There are many ways to achieve them, because there are many open source logging and monitoring applications, as well as many public cloud services. Kubernetes has a best practice for setting up a logging and monitoring infrastructure that most Kubernetes provisioning tools support as an add-on. In addition, managed Kubernetes services, such as Google Kubernetes Engine, integrate with GCP logging and monitoring services out of the box.

8- Working with EFK

In the container world, log management is always technically difficult, because each container has its own filesystem, and when a container dies or is evicted, its log files are gone. In addition, Kubernetes can easily scale pods out and in, so we need a centralized mechanism for persisting logs. Kubernetes has an add-on for setting up centralized log management, which is called EFK. EFK stands for Elasticsearch, Fluentd, and Kibana. Together, this application stack provides log collection, indexing, and a UI.
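On the application side, a common pattern (assumed here, not something the EFK add-on requires) is to write one JSON object per line to standard output instead of to a file inside the container, so that a node-level collector such as Fluentd can pick the lines up and forward them for indexing. A minimal Python sketch, with hypothetical names `JsonFormatter` and `make_logger`:

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record):
        return json.dumps({
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


def make_logger(name, stream=sys.stdout):
    """Create a logger that writes JSON lines to the given stream."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]   # replace any handlers set earlier
    logger.setLevel(logging.INFO)
    logger.propagate = False      # avoid duplicate lines via the root logger
    return logger


# Hypothetical application logger.
log = make_logger("orders")
log.info("order %s shipped", 42)
```

Because nothing is written to the container's own filesystem, the log lines survive in the central store even after the container is gone.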

9- Monitoring master and node

Imagine that you have learned how to build your own cluster, run various resources, work through different usage scenarios, and even enhance cluster administration. The next step is to gain a new perspective on your Kubernetes cluster through monitoring. With a monitoring tool, you can see the resource consumption not only of nodes, but also of pods. This helps you use resources more efficiently.
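To give a feel for the arithmetic behind such a tool, the Python sketch below converts Kubernetes resource quantities into plain numbers and computes how much of a node is in use. It handles only the common suffixes (`m` for millicores, and `Ki`, `Mi`, `Gi`, `Ti`, `k`, `M`, `G`, `T` for memory). The usage and allocatable figures are hypothetical, standing in for numbers that a monitoring tool or `kubectl top` would report.

```python
_MEMORY_UNITS = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,   # binary suffixes
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,      # decimal suffixes
}


def parse_cpu(quantity):
    """Convert a CPU quantity such as "500m" or "2" to a number of cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def parse_memory(quantity):
    """Convert a memory quantity such as "128Mi" or "1G" to bytes."""
    for suffix, factor in _MEMORY_UNITS.items():
        if quantity.endswith(suffix):
            return int(float(quantity[:-len(suffix)]) * factor)
    return int(quantity)  # no suffix: the value is already in bytes


def utilization(used, allocatable):
    """Return the fraction of allocatable CPU and memory that is in use."""
    return {
        "cpu": parse_cpu(used["cpu"]) / parse_cpu(allocatable["cpu"]),
        "memory": parse_memory(used["memory"]) / parse_memory(allocatable["memory"]),
    }


# Hypothetical node figures.
node_used = {"cpu": "500m", "memory": "1Gi"}
node_allocatable = {"cpu": "2", "memory": "4Gi"}
print(utilization(node_used, node_allocatable))
# prints {'cpu': 0.25, 'memory': 0.25}
```

The same functions work for a single pod if its usage is compared against its own requests or limits rather than the node's allocatable capacity.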

Summary

In this article, we reviewed 9 advanced topics that are essential for managing cloud applications and servers with complex settings and requirements. You can move on to learn blockchain development or learn Kubernetes deployment on a specific enterprise platform like Red Hat OpenShift.